Amazon.com, Inc. (NASDAQ:AMZN)-backed AI developer Anthropic is reportedly in a standoff with the Pentagon over how its artificial intelligence tools can be used.

The dispute centers on whether Anthropic’s AI models, which include safeguards to prevent harmful actions, can be deployed by U.S. military and intelligence agencies without restrictions, reported Reuters, citing people familiar with the matter.

According to the sources, Anthropic raised concerns that its technology could be used to target weapons autonomously or to spy on Americans without human oversight.

The U.S. government has “extensively used” Anthropic’s AI for national security missions, and the company is engaged in productive discussions about ways to continue that work, an Anthropic spokesperson told the publication.

Anthropic did not immediately respond to Benzinga’s request for comment.

Pentagon officials, citing a Jan. 9 department memo on AI strategy, maintain that commercial AI should be deployable as long as it complies with U.S. law, regardless of corporate usage policies, the report added.

The standoff represents an early test of how Silicon Valley companies can shape the ethical deployment of AI in military contexts.

Anthropic CEO Dario Amodei this week warned in a blog post that AI should support national defense “in all ways except those which would make us more like our autocratic adversaries.”

The San Francisco-based startup is preparing for a potential public offering while investing heavily in U.S. national security partnerships.

The disagreement comes as Anthropic, along with other AI developers like OpenAI, Alphabet Inc.’s (NASDAQ:GOOG) (NASDAQ:GOOGL) Google, and Elon Musk’s xAI, navigates contracts with the Pentagon.

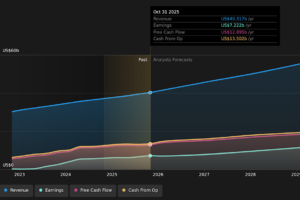

Anthropic has boosted its 2026 revenue projection by 20%, now expecting sales to reach $18 billion this year and $55 billion in 2027.