Microsoft-backed (MSFT) OpenAI is adopting new strategies to improve its forthcoming large language model, code-named Orion, which reportedly shows only marginal performance gains over GPT-4, according to a report by The Information.

According to people familiar with the matter, Orion’s improvements are smaller than those seen between past iterations, such as the leap from GPT-3 to GPT-4.

The limited availability of high-quality training data, which has become scarcer because AI developers have already processed most of what is available, is a major factor in the reported slowdown. Orion’s training has included synthetic data (AI-generated content), which causes the model to show traits similar to those of its predecessors.

To help overcome these constraints, OpenAI and other teams are supplementing synthetic training data with human input. Human evaluators test the models with coding and problem-solving challenges and refine the answers through iterative feedback.

OpenAI is also working with other companies, such as Scale AI and Turing AI, to carry out this more thorough evaluation, The Information said.

“For general-knowledge questions, you could argue that for now we are seeing a plateau in the performance of LLMs,” said Ion Stoica, co-founder of Databricks, in the report. He added that factual data is needed and that synthetic data does not help as much.

As OpenAI CEO Sam Altman pointed out earlier this month, limits on computing power are yet another obstacle to improving AI capabilities.

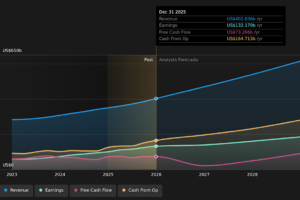

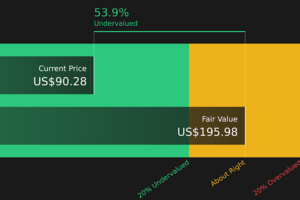

In its most recent fiscal first-quarter results, Microsoft reported net income of $24.67 billion, up 10.66% from the year before, and sales of $65.58 billion, a 16% rise year over year. Earnings per share came in at $3.30, a 10.37% increase.

This article first appeared on GuruFocus.