(Bloomberg Opinion) — Britain’s plans to regulate online content have triggered an unsurprising furor about censorship. But that’s largely fine with the technology giants – because it distracts attention from a bigger problem that goes to the heart of their business model.

The white paper on online harms, published Monday, is an effort to curb the online spread of dangerous content, such as incitement to terrorism. It proposed “substantial fines” on both companies and executives who have breached a “statutory duty of care” that would be enforced by a new regulator. As if that wasn’t enough, the regulator will “ensure the focus is on protecting users from harm – not judging what is true or not.”

It has produced an enormous outcry over free speech. It’s important to discuss this. But let’s not get carried away. This is a white paper, not a bill, let alone an act. It asks questions as well as answering them – the paper includes a list of 18 of them to guide participants in the consultation, which ends in July. And there’s no reason why the result need undermine free expression. Amnesty International, for one, offers clear parameters for the legitimate restriction of speech.

Still, if social media giants are going to be forced to make difficult calls with stiff penalties for getting them wrong, you’d expect a bit of outrage from Silicon Valley, particularly if the U.K. precedent is then followed by governments elsewhere.

Instead, it’s crickets all around. Facebook Inc. itself has asked for this sort of rule – Chief Executive Officer Mark Zuckerberg did so in a Washington Post column last month. Such rules will make his job easier. Rather than being beholden to the court of public opinion about what is or isn’t acceptable content, his responsibility will be to uphold rules specified by the government.

They’ll also provide a very useful distraction from something that should be an important focus for lawmakers: these tech behemoths’ business models. They make almost all their money from advertising, and the question is whether their reach has become anticompetitive.

The companies have created a growth cycle where more user engagement generates more advertising revenue, which funds new products, which attract more users, stoking more engagement, and so on. As a digital ecosystem expands to ensnare billions of people, engagement becomes the lodestar for lifting revenue.

Unfortunately for society, polarizing and untrue posts and videos are usually a surefire way of generating more engagement, as BuzzFeed journalist Craig Silverman demonstrated back in 2016 when he coined the term “fake news.”

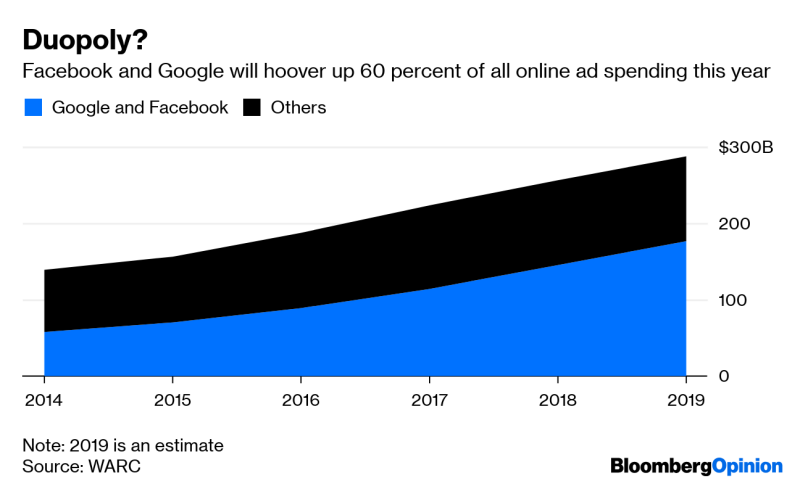

Sites such as Facebook and YouTube, which is owned by Google, are therefore rewarded financially for hosting posts and videos that may attract viewers – but may also cause harm. The link between engagement and revenue has helped them cement their dominance of the online advertising market.

Disentangling the relationship between engagement and revenue is a complex problem that doesn’t have an immediately obvious or proportionate solution. Until such a fix is found, the best stopgap is for governments to intervene to tackle harmful content.

But developing the process for regulating speech will be fraught with controversy. And it will distract from the crucial question of whether the tech giants control too much of the digital advertising industry, and of how their businesses work. Facebook and Google’s advertising offerings resemble black boxes where money and ad campaigns go in, and prospective customers come out. Does this involve unfair competition? Are potential new entrants to the market being unjustly squeezed out?

The starting point for any conversation is a detailed look under the tech giants’ hood. But a heated debate on the practicalities of containing harmful online speech will unfortunately take precedence over a much-needed discussion about whether the companies should be subject to antitrust regulation.

Dipayan Ghosh has a better understanding of these issues than most, having worked both as an adviser on technology policy to the Barack Obama administration and on public policy at Facebook. “Nothing is going to happen on the content moderation question in a way that will truly hold the industry accountable until the responsibility gets pulled away from Facebook,” he told me. “But they also want a robust discussion about it because that will drag on for years and pull away the spotlight from the more necessary set of economic regulations you could consider.”

The U.K. government has already sown some seeds here. Its reports on the sustainability of journalism and unlocking digital competition each cited the need for a study of the digital advertising market. Digital Secretary Jeremy Wright and Chancellor of the Exchequer Philip Hammond have both taken up this call. It’s important that their findings aren’t drowned out by other debates. It will be months before a bill on online harms surfaces, and the process will tie up the resources of politicians and civil servants alike.

The last thing Facebook and Google want is a government official trying to pick apart their advertising operations. But a body to set standards for content? Sign them up.

To contact the author of this story: Alex Webb

To contact the editor responsible for this story: Jennifer Ryan

This column does not necessarily reflect the opinion of the editorial board or Bloomberg LP and its owners.

Alex Webb is a Bloomberg Opinion columnist covering Europe’s technology, media and communications industries. He previously covered Apple and other technology companies for Bloomberg News in San Francisco.

For more articles like this, please visit us at bloomberg.com/opinion

©2019 Bloomberg L.P.