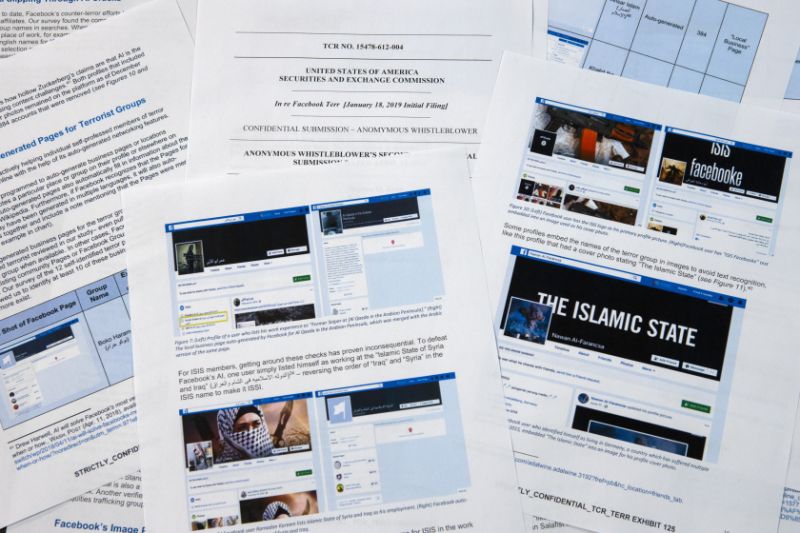

Pages from a confidential whistleblower’s report obtained by The Associated Press are photographed Tuesday, May 7, 2019, in Washington. Facebook likes to give the impression that it’s stopping the vast majority of extremist posts before users ever see them, but the confidential whistleblower’s complaint to the Securities and Exchange Commission alleges the social media company has exaggerated its success. Even worse, it shows that the company is making use of propaganda by militant groups to auto-generate videos and pages that could be used for networking by extremists. (AP Photo/Jon Elswick)

WASHINGTON (AP) — The animated video begins with a photo of the black flags of jihad. Seconds later, it flashes highlights of a year of social media posts: plaques of anti-Semitic verses, talk of retribution and a photo of two men carrying more jihadi flags while they burn the stars and stripes.

It wasn’t produced by extremists; it was created by Facebook. In a clever bit of self-promotion, the social media giant takes a year of a user’s content and auto-generates a celebratory video. In this case, the user called himself “Abdel-Rahim Moussa, the Caliphate.”

“Thanks for being here, from Facebook,” the video concludes in a cartoon bubble before flashing the company’s famous ‘thumbs up.’

Facebook likes to give the impression that it’s staying ahead of extremists by taking down their posts, often before users ever even see them.

But a confidential whistleblower’s complaint to the Securities and Exchange Commission obtained by The Associated Press alleges the social media company has exaggerated its success. Even worse, it shows that the company is inadvertently making use of propaganda by militant groups to auto-generate videos and pages that could be used for networking by extremists.

According to the complaint, over a five-month period last year, researchers monitored pages by users who affiliated themselves with groups the U.S. State Department has designated as terrorist organizations. In that period, 38% of the posts with prominent symbols of extremist groups were removed. In its own review, the AP found that as of this month, much of the banned content cited in the study — an execution video, images of severed heads, propaganda honoring martyred militants — slipped through the algorithmic web and remained easy to find on Facebook.

The complaint is landing as Facebook tries to stay ahead of a growing array of criticism over its privacy practices and its ability to keep hate speech, live-streamed murders and suicides off its service. In the face of criticism, Facebook CEO Mark Zuckerberg has spoken of his pride in the company’s ability to weed out violent posts automatically through artificial intelligence.

“In areas like terrorism, for al-Qaida and ISIS-related content, now 99% of the content that we take down in the category our systems flag proactively before anyone sees it,” Zuckerberg said in an earnings call last month. Then he added: “That’s what really good looks like.”

Zuckerberg did not offer an estimate of how much of total prohibited content is being removed. The research behind the SEC complaint — though based on a limited sample size — suggests it is not as much as Facebook has implied. The complaint is aimed at spotlighting the shortfall. Last year, researchers began monitoring users who explicitly identified themselves as members of extremist groups. This wasn’t hard to document. Some of these people even list the extremist groups as their employers.

As a stark indication of how easily users can evade Facebook, one page from a user called “Nawan al-Farancsa” has a header whose white lettering against a black background says in English “The Islamic State.” The banner is punctuated with a photo of an explosive mushroom cloud rising from a city. The page, still up in recent days, apparently escaped Facebook’s systems, because the letters were not searchable text but embedded in a graphic block.

Facebook concedes that its systems are not perfect, but says it is making improvements.

“After making heavy investments, we are detecting and removing terrorism content at a far higher success rate than even two years ago,” the company said in a statement. “We don’t claim to find everything and we remain vigilant in our efforts against terrorist groups around the world.”

Facebook says it now employs 30,000 people who work on its safety and security practices, reviewing potentially harmful material and anything else that might not belong on the site. Still, the company is putting a lot of its faith in artificial intelligence and its systems’ ability to eventually weed out bad stuff without the help of humans. The new research suggests that goal is a long way off.

Hany Farid, a digital forensics expert at the University of California, Berkeley, who advises the Counter-Extremism Project, a New York and London-based group focused on combating extremist messaging, says that Facebook’s artificial intelligence system is failing. He says the company is not motivated to tackle the problem because it would be expensive.

“The whole infrastructure is fundamentally flawed,” he said.

Another Facebook auto-generation function gone awry scrapes employment information from users’ pages to create business pages. The function is supposed to produce pages meant to help companies network, but in many cases they are serving as a branded landing space for extremist groups. The function has allowed Facebook users to like pages created for al-Qaida, the Islamic State group, the Somali-based al-Shabab and others, effectively providing a list of sympathizers for recruiters.

At the top of an auto-generated page for al-Qaida in the Arabian Peninsula, for instance, the AP found a photo of the bombed hull of the USS Cole — the defining image in AQAP’s own propaganda. The page includes the Wikipedia entry for the group and had been liked by 277 people when last viewed this week.

Facebook also faces a challenge with U.S. hate groups. The researchers in the SEC complaint identified over 30 auto-generated pages for white supremacist groups, whose content Facebook prohibits. They include “The American Nazi Party” and the “New Aryan Empire.” A page created for the “Aryan Brotherhood Headquarters” marks the office on a map and asks whether users recommend it. One endorser posted a question: “How can a brother get in the house.”
