Imagine you’re an inexperienced techie who wants to apply for a job at a company.

Let’s say you have a roommate or a friend who already has a job and a much better grasp of the hellish concept of job interviews, the mere thought of which makes you shudder.

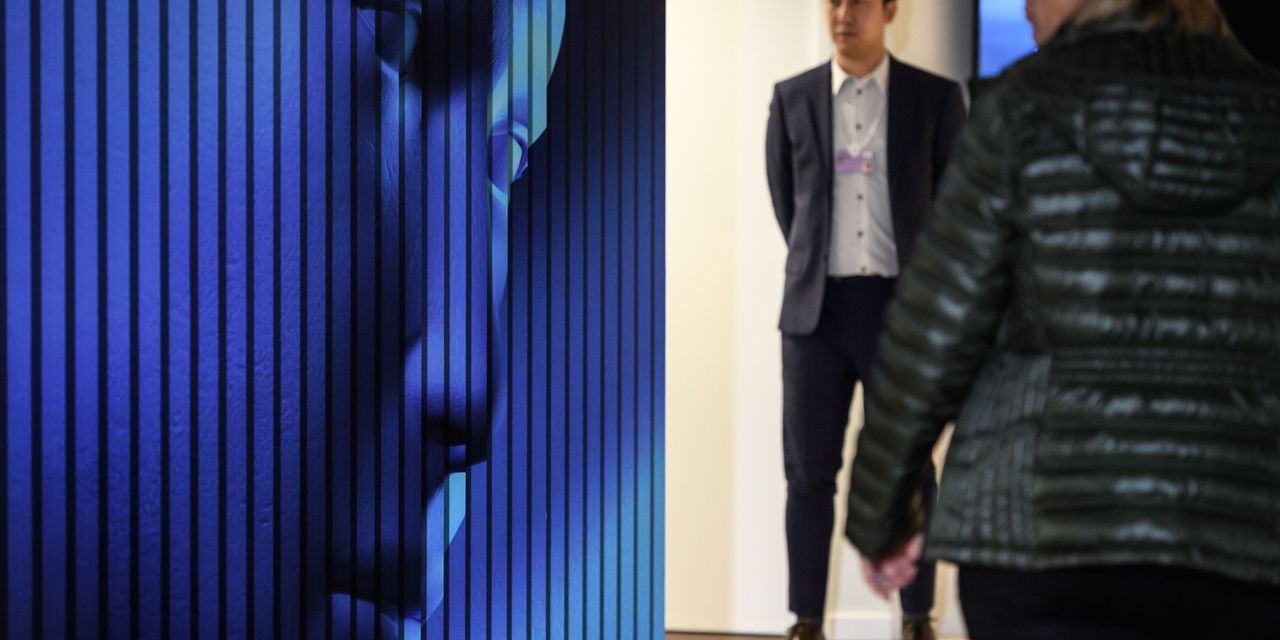

What if you could just slap your face onto his body and have him do the interview instead? As long as no one knew the real tone of your voice, you would be all set. Enter the magical, and often dark, world of deepfakes!

A deepfake is a type of synthetic media in which the face of a person in an existing image or video is replaced with someone else’s likeness. The technology was developed in the 1990s, but it became popular much later thanks to amateur online communities.

Deepfakes range from funny and quirky clips to sinister and dangerous videos depicting political statements that were never made or events that never took place. It gets worse: deepfakes have also been used to create non-consensual pornography, hoaxes, bullying content and more.

However, today I would like to focus on one particular and interesting application of the technology: pretending to be someone else in order to get a job.

Now, you may be wondering why anyone would do that. It seems like too much hassle, and a risky and stressful approach to getting an actual job. Unless … the reason to get a particular job isn’t to acquire gainful employment, but instead to gain access to the company’s infrastructure so you can steal sensitive information.

In that case, you wouldn’t be a camera-shy introvert, but rather a tech-savvy social engineer/hacker, posing as someone else — someone qualified.

According to an FBI alert published June 28, the number of cases in which malicious actors successfully applied for work-at-home positions using stolen PII (personally identifiable information) is on the rise.

This data was most likely acquired by hacking victims’ accounts or even companies’ HR databases.

The FBI warns that hackers use voice spoofing (imitating another person’s voice with digital-manipulation tools) and publicly available deepfake tools (DeepFaceLab, DeepFaceLive, FaceSwap and others) to fool unwary interviewers.

According to the FBI report, one of the ways to recognize that something is amiss is to pay attention to “actions and lip movement of the person seen interviewed on-camera”, which “do not completely coordinate with the audio of the person speaking.”

However, this isn’t a foolproof way of detecting deepfakes. Nowadays, a growing number of apps enable seamless, real-time integration into video calls, which results in higher-quality lip sync. This isn’t to say that deepfake videos are indistinguishable from the real thing.

Aside from imperfections in lip syncing, other giveaway clues include facial discoloration, unnatural blinking patterns, blurring, weird digital background noise and a difference in sharpness and video quality between the face and the rest of the video (for example, the face looks sharper and cleaner than the background image).
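If you wanted to put a rough number on that sharpness mismatch, one option is the variance of the Laplacian, a common focus measure: the sharper a patch of the image, the higher the value. The sketch below is only an illustration, not a deepfake detector. It assumes you have already cropped a face patch and a background patch from a video frame and converted them to 2D grids of grayscale values (that step isn't shown), and the names `laplacian_variance` and `sharpness_ratio` are made up for this example.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over a 2D grid of grayscale values.

    `gray` is a list of equal-length rows and must be at least 3x3,
    because border pixels are skipped.
    """
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Sum of the four neighbours minus four times the centre pixel.
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    return pvariance(responses)


def sharpness_ratio(face_patch, background_patch):
    """How many times sharper the face patch is than the background patch."""
    background = laplacian_variance(background_patch)
    face = laplacian_variance(face_patch)
    # A perfectly flat background has zero variance; avoid dividing by zero.
    return face / background if background > 0 else float("inf")
```

A ratio far above what you see in ordinary webcam footage would only be a hint that the face was pasted in. Any cut-off value is an assumption you would have to tune on real recordings, since compression and background blur filters also affect sharpness.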

Identity theft is nothing new, and tech simply provides hackers with new tools to facilitate the process. As always, the crucial step is training and educating staff to withstand the social engineering part of the attack, namely not to give access to vital company infrastructure to new, unvetted hires.

While meeting the candidate in person is always the best way to verify identity, it’s not always possible. In these cases, a few requests can trip up a deepfake model: ask the interviewee to rotate their body (easily done on a regular office chair), to turn their face to an awkward angle in front of the camera, or to hold an open palm in front of their face and move it at varying speeds, alternately hiding and revealing parts of the face between the fingers. These movements can cause some models to glitch and produce artifacts or blurring that expose a deepfake video.

As for audio, watch out for odd phrasing, choppy sentences and strange tone inflections. Sometimes entire sentences are pre-synthesized, which can result in canned answers. In this case, watch out for responses that seem out of context: if an interviewee does not answer a question, or answers it the same way multiple times, this could also be a red flag.
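The "same answer multiple times" red flag is easy to check if you happen to have a transcript of the interview. Here is a minimal sketch using Python's standard `difflib` module. It assumes the answers are already available as plain text (for example, from a speech-to-text tool, which isn't shown), and the function name `repeated_answers` and the 0.9 similarity threshold are arbitrary choices made for this example.

```python
from difflib import SequenceMatcher
from itertools import combinations


def repeated_answers(answers, threshold=0.9):
    """Return index pairs of answers whose text is nearly identical."""
    # Normalise case and whitespace so trivial differences don't hide a repeat.
    normalized = [" ".join(answer.lower().split()) for answer in answers]
    flagged = []
    for (i, first), (j, second) in combinations(enumerate(normalized), 2):
        if SequenceMatcher(None, first, second).ratio() >= threshold:
            flagged.append((i, j))
    return flagged


answers = [
    "I have five years of experience with Python.",
    "I led the migration to Kubernetes.",
    "I have five years of experience with  python.",
]
print(repeated_answers(answers))  # prints [(0, 2)]
```

A flagged pair is not proof of anything, since nervous candidates repeat themselves too. It is simply a cue to ask a follow-up question that a canned answer can't cover.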

You can be the target of identity theft even if you’re not an HR representative in your company.

I’ve written articles on how to protect yourself from hacker attacks, so I won’t go into details here. Instead, I will urge you to keep up the usual protective measures and practices, such as having strong and unique passwords for all of your accounts and enabling two-factor authentication (2FA) whenever possible.

In a job-seeking scenario, keep tabs on your communications and reach out via different channels to your potential employer, if possible. A hacker won’t be able to cover all of them, and receiving conflicting information from both you and the hacker will raise HR’s suspicion about a possible imposter.

Being a bit more proactive in this stage of your employment may be all it takes to dispel the illusion, impress your future employer, and get that job instead of your evil, digital doppelganger.

Finally, if you think you might be a victim of identity theft, reach out to your local law enforcement and file a report.
